Identifying the Problem

One issue I would like to tackle in the world is the over-abundance of what I will call recommendation AI: the artificial intelligence technologies that Facebook, Google, and Twitter use to suggest content and media for users to view. This technology tends to be harmful because many users today are unable to make their own decisions about what kind of media they consume.

Why this Problem is Important to Tackle

This problem is important to me because it is blatantly malicious in intent. I know far too many people who fall prey to this type of technology. It is very sad to see how polarized people can become on either side of the political spectrum, and about many everyday things in general. I believe that if this AI were not interfering with the digital content we consume, people would be freer to make their own decisions about what media they consume online. With the rise of the flat earth movement, alt-right and extreme-left thinking, and obsessions with dangerous trends, I feel it is important for everyone to recognize this problem and work for change. I deeply value freedom of choice, freedom of speech, and freedom in general. Under the current recommendation system, however, that freedom is being stifled: people are not free to make their own decisions because a computer has already made those decisions for them. Before diving into the problem itself, some background information is necessary.

Background Information on the Issue

The term “sheep” has been prevalent in many forms of media lately. “Sheep” is typically used to describe people who will blindly follow and listen to any information they receive. “A meek, unimaginative, or easily led person” is the more specific definition from dictionary.com. The term carries a negative connotation, implying that members of the general public are ignorant and incapable of acting on their own independent thoughts and decisions, much like a herd of sheep. Two major weaknesses make someone a “sheep”: a lack of digital literacy and a need to satisfy one’s confirmation bias.

Digital literacy is one’s ability to search for, evaluate, and create information via digital platforms. Having evolved from media literacy, digital literacy is a necessary tool for navigating the world we live in today. (Wikipedia) In most places with access to digital media and the internet, digital literacy is now becoming part of children’s education. However, teaching digital literacy cannot guarantee that everyone will gain this skill, especially among older generations.

Confirmation bias occurs when a person is so convinced that a concept or idea is true that they treat any and all information as confirmation of their beliefs. This leads people to ignore information that opposes their views, or to deny that such information exists at all. This trait is commonly found in people who are highly anxious or have low self-esteem. (Psychology Today)

When a lack of digital literacy is combined with strong confirmation bias, one can already start to see why current recommendation algorithms can cause more “sheep” to arise. Before we address the issue, let’s take a deeper dive into how these recommendation engines work and how AI is influencing the algorithms.

First, it is important to understand what a recommendation engine is:

“A recommendation engine is an information filtering system uploading information tailored to users’ interests, preferences, or behavioral history on an item. It is able to predict a specific user’s preference on an item based on their profile.” (Smart Hint)

At a basic level, a recommendation engine sounds as though its suggestions arise entirely from user data, so one could argue that whatever it recommends is simply what the user would have chosen anyway. However, companies like YouTube use machine learning and artificial intelligence to test possible recommendations on a model of you before they ever reach you. In the Center for Humane Technology’s video presentation “Inconvenient Truth for Tech,” former Google employee Tristan Harris explains how recommendation AI works.

YouTube (owned by Google) first collects the data you give it: what you have searched for in the past and any recommended content you have clicked on. From that data, an AI-powered algorithm slowly builds a digital version of you over time. Even when you aren’t searching on Google, that AI version of you is being shown a variety of content, and it decides what to click on and watch in your place. The goal is for this model to become so much like you over time that it can predict with extreme accuracy which recommended content you will watch, so that YouTube knows exactly what content to show you. (Inconvenient Truth for Tech)
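
To make the idea of a “digital version of you” more concrete, here is a deliberately tiny sketch in Python. YouTube’s real system is not public and is far more complex; this toy assumes every video carries a few topic tags, builds the user’s profile as a count of topics they have watched, and uses a simple overlap score as a hypothetical stand-in for a learned click-prediction model. All names and data below are made up for illustration.

```python
from collections import Counter

# Hypothetical toy data: each video is tagged with a few topic labels.
HISTORY = [{"title": "Healthy diet tips", "topics": ["diet"]}]
CANDIDATES = [
    {"title": "Extreme diet challenge", "topics": ["diet", "extreme"]},
    {"title": "Guitar lesson", "topics": ["music"]},
    {"title": "Space documentary", "topics": ["science"]},
    {"title": "Fasting for 7 days", "topics": ["diet", "extreme"]},
]


def build_profile(watch_history):
    """The 'digital version of you': topic counts over everything watched."""
    return Counter(topic for video in watch_history for topic in video["topics"])


def predicted_click_score(profile, video):
    """Stand-in for a learned model: share of the user's history that matches
    this video's topics. Higher means 'more likely to click'."""
    total = sum(profile.values()) or 1
    return sum(profile[topic] for topic in video["topics"]) / total


def recommend(watch_history, candidates, k=3):
    """Rank candidates by predicted engagement and return the top k."""
    profile = build_profile(watch_history)
    ranked = sorted(
        candidates,
        key=lambda video: predicted_click_score(profile, video),
        reverse=True,  # sorted() is stable, so ties keep catalogue order
    )
    return ranked[:k]


def simulate_feedback_loop(watch_history, candidates, rounds):
    """Each round the user clicks the top pick, which is added to their
    history, so the profile keeps reinforcing itself."""
    history = list(watch_history)
    clicked = []
    for _ in range(rounds):
        top = recommend(history, candidates, k=1)[0]
        history.append(top)
        clicked.append(top["title"])
    return clicked


if __name__ == "__main__":
    print([video["title"] for video in recommend(HISTORY, CANDIDATES, k=2)])
    print(simulate_feedback_loop(HISTORY, CANDIDATES, rounds=3))
```

With a single “diet” video in the history, the two extreme-diet videos outrank everything else, and in the feedback loop the same top pick wins every round because each click feeds back into the profile. Even this crude scoring rule shows how optimizing for predicted clicks can narrow what a person sees.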

This is where the problem comes in. It turns out this recommendation algorithm became too good at showing users exactly what they wanted: it reached the point where people were receiving the same kind of content over and over, often eye-grabbing clickbait. For example, a young teenage girl who searched “diet” in the YouTube search bar was often recommended eating-disorder videos afterward. In fact, the algorithm also recommended and popularized conspiracy channels like Infowars, and topics like anti-vaxx and flat earth. (Inconvenient Truth for Tech)

The AI used by YouTube has been designed to take advantage of these two human weaknesses, confirmation bias and poor digital literacy, in order to keep users watching so that Google can make more money. Exploiting people’s weaknesses and their tendency to be “sheep” is deeply immoral, especially for financial gain. This technology relates to all six problems outlined by the Center for Humane Technology: Digital Addiction, Mental Health, Breakdown of Truth, Polarization, Political Manipulation, and Superficiality. (Humane Tech)

The platform I am going to redesign for this project is YouTube. I use YouTube a lot, and I have been using it since it first launched. I think it has a lot of potential as an entertainment source, information source, and educational resource. There is so much content on this platform, and the fact that anyone can make YouTube videos makes it a great creative outlet for many people.

Of course, as I began to outline in the previous portion of my project, the platform has many issues as well. Most revolve around its recommendation AI, but there are other concerns too, ranging from minor to major. Let’s use the Center for Humane Technology’s Ledger of Harms to analyze YouTube in its current state.

Attention

According to biographon.com, an average mobile session on YouTube lasts more than 40 minutes. YouTube has over 30 million visitors per day, many of whom watch on mobile, possibly across multiple sessions per day. From this data, one can conclude that YouTube serves as a frequent distraction, which can harm people’s attention spans.

Mental Health

As mentioned above, YouTube’s recommendation system can suggest potentially harmful content to people who are searching with more innocent intentions. Watching content that triggers emotional responses can be extremely harmful to the mental health of those who are susceptible. Furthermore, YouTube users’ obsession with subscriber counts and likes can harm small-time creators who feel they will never be valid until they have as many subscribers as the mega-channels on the site.

Democracy

YouTube has enabled the growth of very popular political propaganda and fake news channels. Recently, the platform has taken moderation more seriously, and channels like Infowars have started to be banned. In August 2019, YouTube removed another Infowars-branded account (The Hill), indicating that YouTube is beginning to take a step forward in how it handles political content.

Children

This topic is sensitive to me because I have a little sister who fell victim to strange, obsessive children’s videos. There is definitely content on YouTube that is very addictive to children and manipulates their sense of reality. However, this issue is very difficult to explain, and understandably a difficult design challenge to conquer. If you are interested, here are a few links that explain the issue better than I can:

https://www.youtube.com/watch?v=v9EKV2nSU8w

https://www.youtube.com/watch?v=NceTdz8KlYU

Redesigning YouTube:

For my redesign, I aim to tackle a few of the issues I mentioned in the Ledger of Harms:

- Subscriber/Like Obsession

- Fake News

- Harmful Content

- Recommendation AI

These may not be all of YouTube’s problems, but they currently seem to be the biggest concerns.

Subscriber/Like Obsession

In my version of YouTube, videos and channels would not have a visible like count, view count, or subscriber count. YouTube needs this information internally for trending content and ad revenue, but users do not need to see it. (I would still allow users to subscribe to channels so that they could receive the content they prefer.) Instagram and Facebook are already considering dropping this feature for the well-being of their users (Search Engine Journal). YouTube is going to begin truncating subscriber counts (The Verge); however, I think YouTube would be better off making this information invisible to users and individual channels entirely. This would level the playing field and keep users from competing with each other for view counts.
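
One way to picture “keep the numbers internally, hide them from everyone else” is a record that the platform stores in full but only partly renders. This is a minimal sketch under assumed names (`ChannelStats`, `public_view`, and `subscribe` are hypothetical, not anything YouTube actually uses):

```python
from dataclasses import asdict, dataclass


@dataclass
class ChannelStats:
    """Hypothetical record the platform would still keep internally,
    e.g. for computing trending content."""
    channel_name: str
    subscribers: int
    total_views: int
    total_likes: int


# Counts that, in this redesign, are never shown to viewers or to the channel.
HIDDEN_FIELDS = {"subscribers", "total_views", "total_likes"}


def public_view(stats):
    """Fields a channel page would display: everything except the counts."""
    return {key: value for key, value in asdict(stats).items() if key not in HIDDEN_FIELDS}


def subscribe(stats):
    """Subscribing still works; it just updates a number nobody sees."""
    stats.subscribers += 1
```

The design choice is that hiding happens at display time: the counts still exist for trending, while every page is built from `public_view`, so no one can compare numbers.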

Fake News and Harmful Content

YouTube has already begun removing accounts like Infowars that promote fake news, but moderating content that is uploaded at a rate of 300 hours per minute is no easy feat. (BiographON) Human moderation can be quite unhealthy for workers, since much of the content moderators must view is violent and disturbing. YouTube has an automated system called Content ID that identifies copyrighted content so it can be taken down. For violence, murder, suicide, and child pornography, however, YouTube currently employs human moderators. (The Verge) In my redesign of YouTube, a lot more money would go into developing an AI that can distinguish typical content from harmful content, so that humans would not have to expose themselves to such horrific content on a daily basis. I also suggest that any content reported by users be taken down automatically until it has been properly reviewed. (This would be a fail-safe in case the AI fails to recognize harmful content.)
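
The moderation flow proposed above can be sketched as a small set of video states. This is a hypothetical illustration only: `looks_harmful` stands in for the verdict of the harmful-content AI described above, `violates_policy` for the outcome of the later review, and the function and state names are made up.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PUBLIC = "public"
    HIDDEN_PENDING_REVIEW = "hidden_pending_review"
    REMOVED = "removed"


@dataclass
class Video:
    video_id: str
    status: Status = Status.PUBLIC


def ai_screen(video, looks_harmful):
    """First line of defence. `looks_harmful` stands in for the verdict of a
    hypothetical harmful-content classifier; flagged uploads are removed
    without a human having to watch them."""
    if looks_harmful:
        video.status = Status.REMOVED


def report(video):
    """Fail-safe: any user report takes a public video down at once,
    in case the classifier missed it."""
    if video.status is Status.PUBLIC:
        video.status = Status.HIDDEN_PENDING_REVIEW


def review(video, violates_policy):
    """Resolve a reported video: remove it if it breaks the rules,
    otherwise put it back up."""
    if video.status is Status.HIDDEN_PENDING_REVIEW:
        video.status = Status.REMOVED if violates_policy else Status.PUBLIC
```

A reported video disappears immediately and only returns if the review clears it, which is what makes user reports a fail-safe for anything the AI misses.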

Recommendation AI

I’m going to tackle this by getting rid of the AI system and its personalized recommendations entirely. Rather than having a recommended tab on the front page of the app or site, I would eliminate the recommended tab, including the recommendations under the video you are watching. On the home page, the first tab would be a trending tab, built solely from user data with no AI assistance, followed by a subscriptions tab that shows content from channels the user subscribes to. When you find a video by searching, the videos suggested alongside it will simply be more results from that search. Suggestions stay locked to the prior search until the user either stops watching videos or manually goes to the search bar to search for a new category.
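
The “locked to your last search” rule and the AI-free trending tab are simple enough to sketch directly. In this hypothetical sketch, substring matching on titles stands in for YouTube’s real search, and trending is assumed to mean “most views recently”; `Session`, `search`, `watch`, `up_next`, and `trending` are illustrative names only.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Session:
    """Hypothetical per-viewer state in the redesigned site."""
    last_query: Optional[str] = None
    results: list = field(default_factory=list)
    watched: set = field(default_factory=set)


def search(session, query, catalogue):
    """Only a new search changes what gets suggested. Substring matching on
    titles is a simplistic stand-in for real search."""
    session.last_query = query
    session.results = [t for t in catalogue if query.lower() in t.lower()]
    return session.results


def watch(session, title):
    """Remember what was watched so it is not suggested again."""
    session.watched.add(title)


def up_next(session, k=3):
    """Videos shown under the player: unwatched results of the prior search,
    in search order. No profile, no engagement model."""
    return [t for t in session.results if t not in session.watched][:k]


def trending(view_counts, k=3):
    """Trending tab built directly from user data (assumed here to be recent
    view counts per video) with no AI ranking."""
    return sorted(view_counts, key=view_counts.get, reverse=True)[:k]
```

Because `up_next` only ever draws from `session.results`, nothing new enters the suggestions until the user runs another search, which is the behavior described above.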

On a final note…

I’m also going to get rid of ads, simply because I don’t care for them. Many YouTubers get much of their money from their own sponsorships nowadays anyway.

What Stays the Same

Here are some important things I am going to keep the same in this redesign:

- Search system

- Comments

- Trending page

- The overall layout and general settings

- Dark-mode still exists (It’s easier on some people’s eyes)

- Watch-time restrictions can be manually set (people should still be able to choose how long they watch)

- Subscription-based services still exist

- Almost anything else I haven’t mentioned

My Redesign

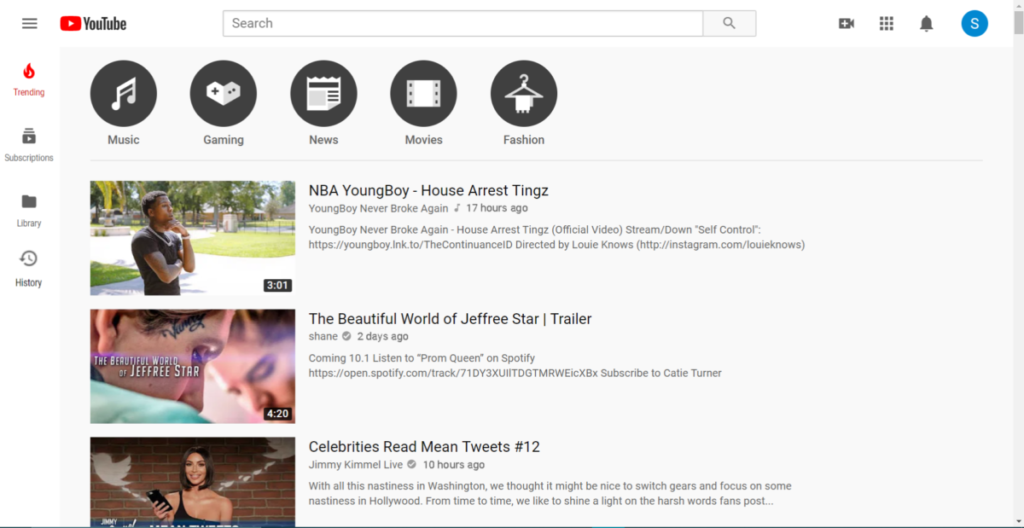

Here is some side by side comparisons between YouTube now (left) and my version of the platform (right):

Conclusion

These are only my initial thoughts and suggestions for changes. Not all of YouTube’s issues can be corrected in Photoshop, but addressing these few concerns could go a long way toward improving the platform.

Sources (Links – proper bibliography to be added)

https://www.dictionary.com/browse/sheep?s=t

https://en.wikipedia.org/wiki/Digital_literacy

“Inconvenient Truth for Tech” (video) Center for Humane Technology

https://humanetech.com/problem/

https://biographon.com/youtube-stats/

https://www.searchenginejournal.com/facebook-might-follow-instagram-by-removing-like

https://www.theverge.com/2019/5/21/18634368/james-charles-tati-westbrook-tfue-subscriber-count-change-youtube-social-blade

https://www.theverge.com/2018/3/13/17117554/youtube-content-moderators-limit-four-h
